A complete suite of technology and solutions

It’s a complex, ever-changing world out there, and your autonomous vehicle needs to navigate it safely. But getting your AI on the road can be delayed by an expensive cycle of testing and data collection.

aidkit breaks the cycle and gets your processes flowing through comprehensive testing and guidance for lean data collection.

No matter the model, scene, or ODD, aidkit ’til you make it.

aidkit

Harmonize safety and AI for effective development

aidkit is the toolkit to test the perception functions of ADAS/ADS and ensure they are safe for deployment.

By testing the model at the heart of your perception component for robustness against a range of altered scenes, aidkit ensures reliable performance in your ODD.

Where performance falters, aidkit guides your data campaign so you know how to improve.

aidkit integrates into existing workflows across your team and is optimized to reduce time to deployment through features such as scaling, data lineage, and automatic retraining.

Deploy a safer perception component, backed by the empirical evidence you need to support your safety claims to safety managers and external regulators.

Testing

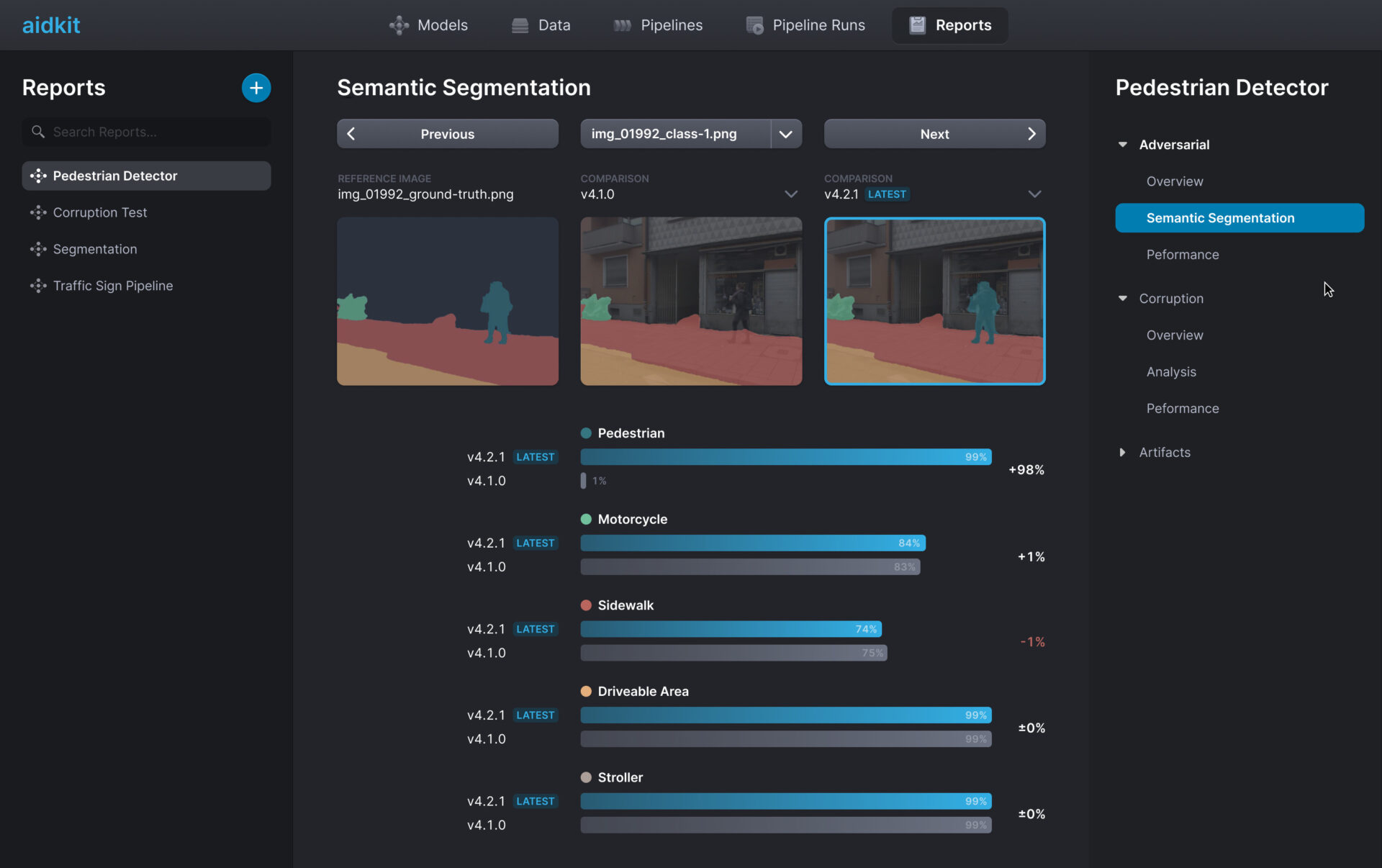

Automate perception component testing

aidkit generates a wide range of corrupted images for performance- and worst-case analysis to ensure the robust handling of common cases and identify edge and corner cases within and around your ODD.

Data

Guide lean and targeted data campaigns

Testing with aidkit shows you exactly what data to collect and label to address your performance shortfalls, so you can tailor your data campaign to fill gaps without extraneous collection and labeling.

Safety

Translate ML testing into explainable safety arguments

aidkit provides explainable, evidence-based risk scores that capture both impact and probability of occurrence for every tested ODD scenario, along with a complete record of how you got your results.

aidkit: One tool for all your tasks

Simplify and master perception models

By augmenting data with perturbation-based image operations, aidkit can test the performance of perception models under conditions that can occur in an ODD but are not easily captured in fleet data. This technique helps fill the gap between simulation and reality. Alongside real-world data for initial training and simulation for later testing, augmented data should be a pillar of your methodological triangulation: the key to a strong scientific basis and confidence in your AI model.

aidkit makes these complex ideas and tasks easy to implement and understand, allowing you to translate the requirements of current and upcoming safety standards into clear technical pass/fail criteria and then demonstrate that you have met them.
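To make the idea of performance-case and worst-case analysis against pass/fail criteria more concrete, here is a minimal, hypothetical sketch in Python. It is not aidkit's API or method: the toy model, the two corruptions (`darken`, `add_noise`), the severity levels, the definition of "performance case" as the mean accuracy across severities, and the 0.8 pass/fail threshold are all illustrative assumptions. Each corruption is applied at several severities, the lowest accuracy becomes the worst-case score, and a threshold on that worst case turns the result into a pass/fail verdict.

```python
import random
from statistics import mean

# Hypothetical toy setup (illustrative only, not aidkit's API):
# an "image" is a flat list of pixel intensities in [0, 1], and the
# "model" labels an image as bright (1) if its mean intensity exceeds 0.5.
def toy_model(image):
    return 1 if mean(image) > 0.5 else 0

def darken(image, severity):
    # Simulated low light: scale every pixel down by the severity factor.
    return [p * (1.0 - severity) for p in image]

def add_noise(image, severity, seed=0):
    # Simulated sensor noise: uniform noise in [-severity, severity],
    # clipped back to [0, 1]; seeded so runs are reproducible.
    rng = random.Random(seed)
    return [min(1.0, max(0.0, p + rng.uniform(-severity, severity))) for p in image]

def accuracy(model, images, labels):
    # Fraction of images the model labels correctly.
    return mean(1.0 if model(x) == y else 0.0 for x, y in zip(images, labels))

def robustness_report(model, images, labels, corruptions, severities, threshold):
    # For each corruption, score the model at every severity level.
    #   performance_case: mean accuracy over severities (assumed definition)
    #   worst_case: lowest accuracy over severities
    #   passed: hypothetical pass/fail criterion applied to the worst case
    report = {}
    for name, corrupt in corruptions.items():
        scores = [
            accuracy(model, [corrupt(x, s) for x in images], labels)
            for s in severities
        ]
        worst = min(scores)
        report[name] = {
            "performance_case": mean(scores),
            "worst_case": worst,
            "passed": worst >= threshold,
        }
    return report

if __name__ == "__main__":
    images = [[0.9] * 4, [0.7] * 4, [0.2] * 4, [0.1] * 4]
    labels = [1, 1, 0, 0]
    corruptions = {"darken": darken, "noise": add_noise}
    report = robustness_report(toy_model, images, labels, corruptions,
                               severities=[0.0, 0.2, 0.4], threshold=0.8)
    for name, result in report.items():
        print(name, result)
```

In this toy setup, the strongest darkening pushes one bright image below the decision boundary, so the worst-case accuracy for `darken` drops to 0.75 and fails the assumed 0.8 threshold, even though its mean accuracy across severities stays above it. This is exactly the kind of gap a worst-case view is meant to expose.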

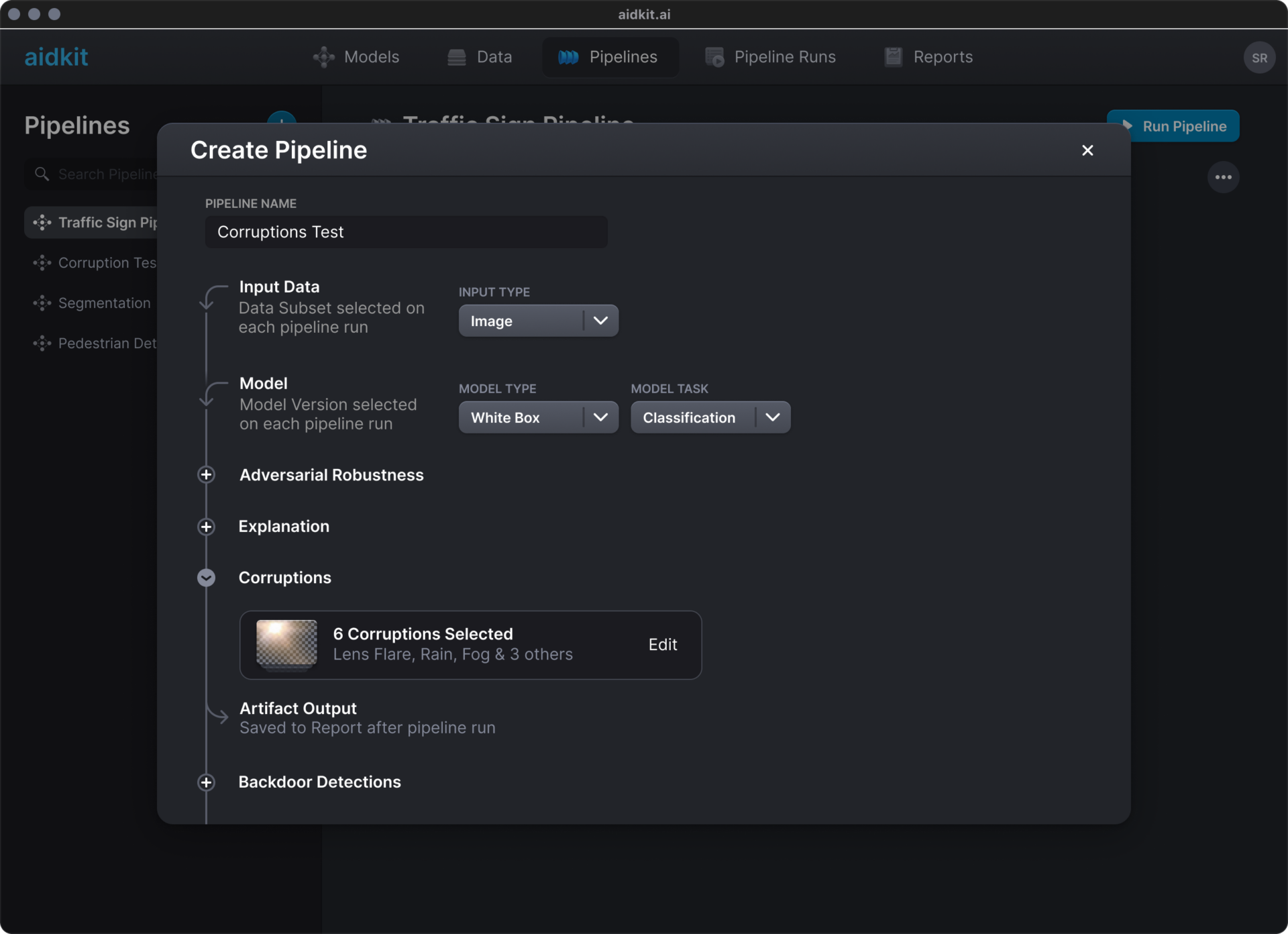

01

Identify insufficient performance

aidkit can test segmentation, detection, or classification models, from PyTorch to TensorFlow

02

Set up your tests

Choose your metrics, set the parameters, and customize the corruptions for your tests

03

Run your tests

Take a coffee break, but with scalability and interim monitoring, don’t get too comfortable!

04

Review your results

Get your risk score reports and other statistics to present your safety case or plan how to improve

05

Iterate for improvement

Automatically use artifacts for further testing and see where you need more data

Driving safe perception

Continue your journey with us

Don’t just manage risks. Reduce your perception system’s chances of encountering unknown situations and enhance its resilience.